A Framework for Filtering ‘Shiny Object’ Syndrome in Enterprise Stacks

The Technical Thesis: In 2026, the true cost of technology adoption is no longer the license fee or the seat price; it’s the Cognitive Load and System Entropy introduced by unvetted abstractions. To maintain velocity, enterprises must pivot from “Latest-is-Best” to a “Fundamentals-First” rubric, ensuring that every new tool earns its place in the architecture.

At Hoyack, we see it daily: companies paralyzed by a fragmented stack of AI tools that promised “efficiency” but delivered “maintenance debt.” Here is our framework for filtering the noise.

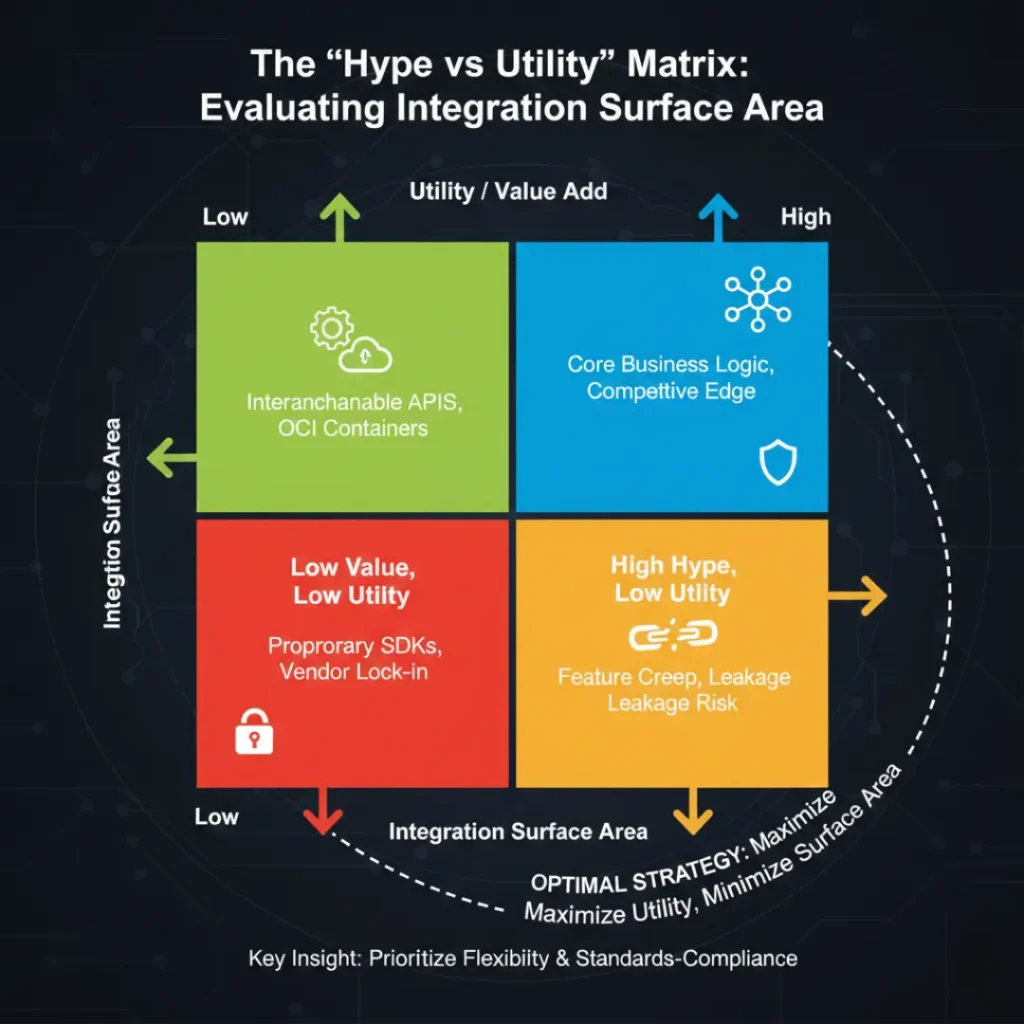

1. The ‘Hype vs. Utility’ Matrix: Evaluating Integration Surface Area

When a new AI framework hits the market, the first question shouldn’t be “What can it do?” but rather “How much of our core logic does it touch?”

We evaluate tools based on their Integration Surface Area:

- The Leakage Risk: Does this tool require a proprietary SDK that forces its way into our core business logic (e.g., vendor-specific decorators or custom data types)?

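- The Interchangeable Ideal: Or does it sit behind a standard boundary, such as an interchangeable API or an OCI-compliant container, so that it can be replaced without touching core logic?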

The Hoyack Rule: If a framework requires you to rewrite your core Service layer to fit its “opinionated” workflow, you aren’t adopting a tool; you’re signing a long-term lease on technical debt.
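One way to keep the integration surface small is to let the Service layer depend on an interface you own and confine any vendor SDK to a thin adapter. The sketch below is illustrative only: `TextSummarizer`, `TicketService`, and `TruncatingSummarizer` are hypothetical names, and the adapter is a stand-in that truncates text rather than calling a real SDK.

```python
from dataclasses import dataclass
from typing import Protocol


class TextSummarizer(Protocol):
    """Interface owned by our codebase; the Service layer depends only on this."""

    def summarize(self, text: str) -> str: ...


@dataclass
class TicketService:
    """Core business logic: no vendor imports, decorators, or data types."""

    summarizer: TextSummarizer

    def triage_note(self, ticket_body: str) -> str:
        return f"TRIAGE: {self.summarizer.summarize(ticket_body)}"


class TruncatingSummarizer:
    """Stand-in adapter (hypothetical). A real adapter would wrap the vendor
    SDK here and convert its types to plain strings at this boundary."""

    def __init__(self, max_chars: int = 40) -> None:
        self.max_chars = max_chars

    def summarize(self, text: str) -> str:
        return text[: self.max_chars].rstrip()


service = TicketService(summarizer=TruncatingSummarizer(max_chars=12))
print(service.triage_note("Checkout page times out on mobile"))
```

Swapping vendors then means writing a new adapter class; `TicketService` does not change.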

2. Quantifying System Entropy: Feature Code vs. Glue Code

System entropy is the measure of disorder in your stack. In the rush to implement AI, many teams inadvertently increase entropy by writing massive amounts of “Glue Code”: the fragile scripts required to make Tool A talk to Tool B.

The Metric to Track: Keep a close eye on the ratio of Feature Code (code that delivers actual value to your users) to Glue Code (code that manages infrastructure and tool integration).

- Healthy Stack: 80% Feature / 20% Glue.

- At-Risk Stack: < 50% Feature. When more than half of your code, and with it more than half of your sprint, goes to fixing “the plumbing” between AI abstractions, your “Shiny Object” has officially become a bottleneck.
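The ratio can be tracked with a few lines of code once you have line counts for each category. This is a minimal sketch: how you count lines and decide which code is “feature” versus “glue” is up to your team, and the `"watch"` label for the band between 50% and 80% is our assumption, since only the two endpoints are defined above.

```python
def feature_share(feature_loc: int, glue_loc: int) -> float:
    """Fraction of counted lines that are Feature Code."""
    total = feature_loc + glue_loc
    if total == 0:
        raise ValueError("no code counted")
    return feature_loc / total


def classify_stack(feature_loc: int, glue_loc: int) -> str:
    """Apply the thresholds above: >= 80% feature is healthy, < 50% is at-risk."""
    share = feature_share(feature_loc, glue_loc)
    if share >= 0.80:
        return "healthy"
    if share < 0.50:
        return "at-risk"
    return "watch"  # assumption: the in-between band is not named in the rubric


# Hypothetical line counts, for illustration only.
print(classify_stack(feature_loc=8_000, glue_loc=2_000))
print(classify_stack(feature_loc=4_000, glue_loc=6_000))
```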

3. The ‘Boring Technology’ Moat

The most successful AI experiments don’t happen in a vacuum; they happen on the shoulders of stable, “boring” giants. By building your foundation on PostgreSQL, Linux, and Standard Web APIs, you create a resilient “Critical Path.”

- Stability at the Core: Your primary database and auth layers should be predictable.

- Agility at the Periphery: Use “boring” infrastructure to host your AI experiments. If an experimental LLM framework fails or becomes obsolete, you can swap it out at the periphery without the entire system collapsing.

This approach allows for Faster Experimentation because the stakes of failure are localized, not systemic.
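A small wrapper can make “localized, not systemic” concrete: if the experimental component raises, the request falls back to the stable path. The sketch below assumes a hypothetical search feature; `with_fallback`, `keyword_search`, and `flaky_llm_search` are illustrative names, not part of any framework.

```python
import logging
from typing import Callable

logger = logging.getLogger("periphery")


def with_fallback(
    experimental: Callable[[str], str],
    stable: Callable[[str], str],
) -> Callable[[str], str]:
    """Return a callable that tries the experimental path, then the stable one."""

    def call(query: str) -> str:
        try:
            return experimental(query)
        except Exception:
            # The failure stays at the periphery; the core path still answers.
            logger.warning("experimental path failed; using stable path")
            return stable(query)

    return call


def keyword_search(query: str) -> str:
    """Stable, 'boring' implementation (hypothetical)."""
    return f"keyword results for {query!r}"


def flaky_llm_search(query: str) -> str:
    """Experimental implementation that simulates an outage (hypothetical)."""
    raise TimeoutError("experimental framework unavailable")


search = with_fallback(flaky_llm_search, keyword_search)
print(search("red shoes"))
```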

4. The “No-Ship” Decision: A Case Study in SLIs

One of the most valuable services we provide at Hoyack is knowing when not to ship a popular tool.

The Scenario: A client recently pushed to adopt a trending AI-orchestration framework.

The Technical Audit: Our team measured the Service Level Indicators (SLIs) and found that the framework’s abstraction layer added an average of 200ms of latency to the request-response cycle.

The Verdict: For a high-frequency retail platform, 200ms is the difference between a conversion and a bounce. We advised a “No-Ship” decision for that specific framework, opting instead for a lightweight, custom-built wrapper that maintained the client’s strict performance budget.
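The check behind a decision like this can be expressed as a comparison of average latency with and without the framework against a budget. The sketch below is not the audit tooling itself: the sample values are made up to reproduce a 200ms average overhead, and the 50ms budget is a hypothetical figure, since the client’s actual budget is not stated here.

```python
from statistics import mean


def added_latency_ms(baseline_ms: list[float], with_framework_ms: list[float]) -> float:
    """Average latency added by the framework, in milliseconds."""
    return mean(with_framework_ms) - mean(baseline_ms)


def ship_decision(
    baseline_ms: list[float],
    with_framework_ms: list[float],
    budget_ms: float,
) -> str:
    """Return 'ship' if the added latency fits the budget, else 'no-ship'."""
    overhead = added_latency_ms(baseline_ms, with_framework_ms)
    return "ship" if overhead <= budget_ms else "no-ship"


# Illustrative samples only; real SLIs would come from production telemetry.
baseline = [40.0, 50.0, 60.0]
with_framework = [240.0, 250.0, 260.0]

print(added_latency_ms(baseline, with_framework))
print(ship_decision(baseline, with_framework, budget_ms=50.0))  # budget is hypothetical
```

Averages hide tail behavior, so a real audit would likely also compare percentiles such as p95 or p99.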

Build a Stack That Scales, Not One That Breaks

Don’t let “Shiny Object” syndrome turn your enterprise into a graveyard of abandoned AI tools. At Hoyack, our U.S.-based engineering teams prioritize clean architecture and long-term ROI over passing trends. We help you audit your current stack, eliminate entropy, and implement AI that actually moves the needle.

Schedule a Consultation with Hoyack to Audit Your Enterprise Stack